While Taylor Swift was back in rehearsals for the next leg of her Eras Tour, sexually explicit deepfake images of her went viral on X. Swift has millions of Swifties and a powerful team who have her back, but many young women face this trauma alone and with no legal recourse.

A few months ago, female students at an Issaquah high school fell victim to artificial intelligence (AI)-generated pornographic images when a teenage boy took photos of several of his classmates and used generative AI to alter them into fake nude photos, or deepfakes, of these girls. He then sent the photos around the school, humiliating these students.

The term “deepfake” is a combination of “deep learning” and “fake.” Deepfake photos, videos, and audio have been used in politics, Hollywood, and even to create an image of Pope Francis wearing a Balenciaga puffer jacket. Deepfakes are created by altering or completely fabricating audio, video, or other digital media using advanced AI algorithms. This process makes it seem as though a person is saying or doing something they never actually did. In a common approach, the creator takes an image or likeness of one person’s face and swaps it onto a different face or body, employing sophisticated artificial intelligence and facial recognition algorithms.

Deepfake nude photos have been weaponized disproportionately against women for purposes of humiliation, harassment, and abuse. Nude fakes are explicitly detailed, posted on popular porn sites, or circulated among social circles. Sensity AI, a firm specializing in deepfake research, has been monitoring online deepfake videos since December 2018. Its findings indicate that approximately 90% to 95% of these videos are nonconsensual pornography. Furthermore, around 90% of this nonconsensual pornographic content features women.[1]

Determining the legality of deepfakes is a complex issue that leaves victims with a confusing set of choices. Existing laws may not adequately cover the nuances of deepfakes, so the available remedies are uncertain. Victims of deepfakes could potentially seek recourse under several legal theories. These might include defamation, if the deepfake falsely harms someone’s reputation; invasion of privacy, particularly if the deepfake uses one’s likeness without consent; and intentional infliction of emotional distress, given the potential for deepfakes to cause significant psychological harm. Of course, this assumes the victim can identify the creator and the platform used to design the harmful image.

Regulating the creation and distribution of deepfakes is fraught with challenges. The rapid advancement of AI makes it difficult for regulatory measures to keep pace. This is particularly true given that deepfake images and videos are being created using open-source software.

While finding a solution to deepfakes is challenging, it’s imperative for legislators to tackle the issue of digital abuse that impacts countless lives. This abuse ruins reputations, has devastating personal impacts, and results in humiliation and harassment.

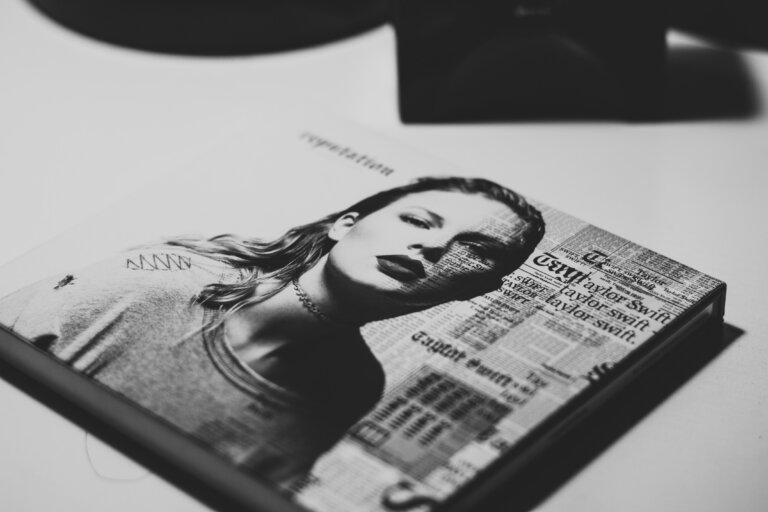

Photo caption: Taylor Swift album. Photo by Rosa Rafael on Unsplash

1. Karen Hao, “Deepfake Porn Is Ruining Women’s Lives. Now the Law May Finally Ban It.,” MIT Technology Review.